Module 5: Ethics, Confidentiality, and Professional Responsibility

Lesson 5.3: Bias, Fairness, and Reliability in Legal AI

Learning Objectives

By the end of this lesson, learners should be able to:

- Define bias in artificial intelligence and identify different forms of bias relevant to legal workflows.

- Explain how AI systems may unintentionally reinforce systemic inequities within legal and judicial processes.

- Evaluate fairness standards and determine when a system’s outputs raise ethical or constitutional concerns.

- Assess the reliability of AI-generated legal outputs, including the risks of hallucinated content or flawed reasoning.

- Apply professional responsibility requirements to ensure AI usage aligns with duties of competence, diligence, fairness, and justice.

- Implement practical safeguards to mitigate bias and improve the reliability of AI-supported legal decision-making.

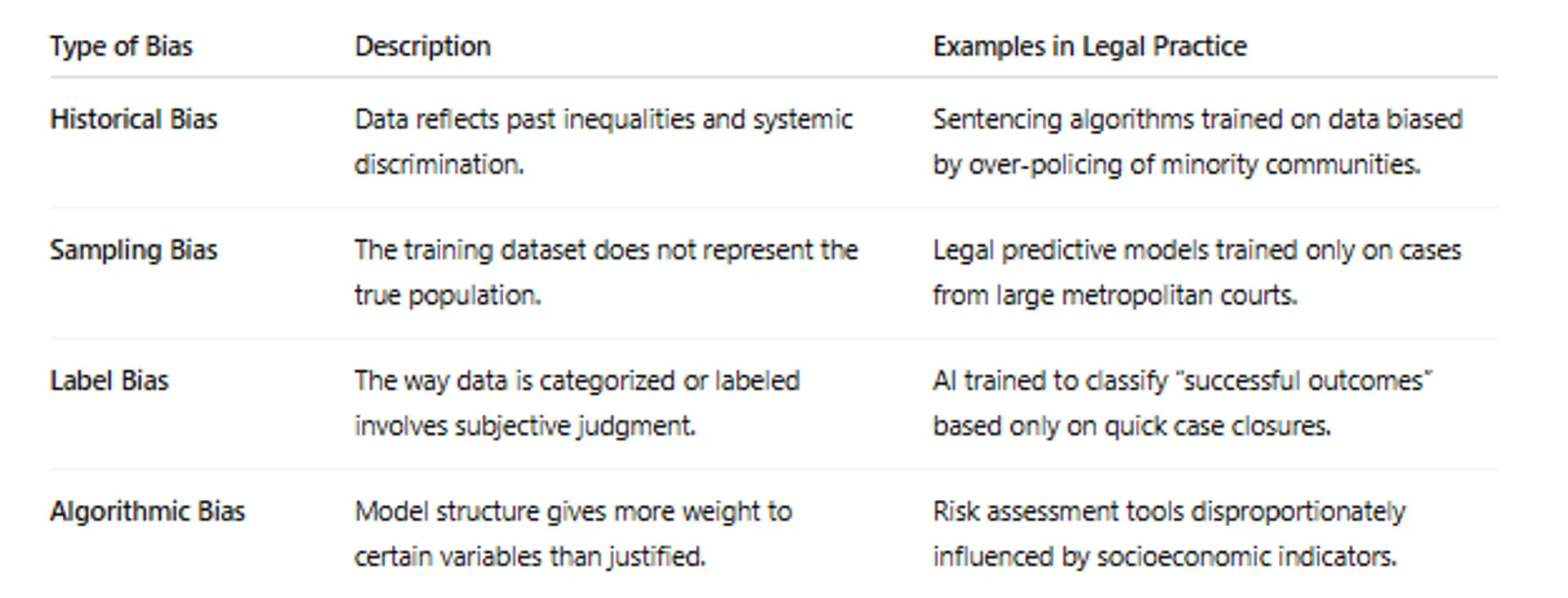

1. Understanding Bias in AI

AI systems do not think in a human sense; they learn patterns from existing data. If that data contains bias, the outputs will reflect it and can amplify it.

Bias in AI is not always intentional. It arises naturally from:

Key Principle:

Biased data → biased model → biased decisions.

In legal settings, this poses constitutional risks involving equal protection, due process, and the right to challenge evidence.
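The "biased data → biased model → biased decisions" chain can be made concrete with a toy example. The Python sketch below "trains" a simple rate-based model on hypothetical historical decisions; the records, field names, and the 0.5 threshold are all invented for illustration. The model never sees group membership, but because neighborhood correlates with group in this assumed data, neighborhood acts as a proxy and the historical disparity carries through to new decisions without anyone intending it.

```python
from collections import defaultdict

# Hypothetical historical records: (group, neighborhood, was_flagged_high_risk).
# Labels reflect past human decisions, not ground truth, and are deliberately
# skewed against group "B" for illustration.
HISTORY = [
    ("A", "north", 0), ("A", "north", 0), ("A", "north", 1), ("A", "north", 0),
    ("B", "south", 1), ("B", "south", 1), ("B", "south", 0), ("B", "south", 1),
]

def train_rate_model(records):
    """'Learn' the historical flag rate per neighborhood (a proxy feature)."""
    counts = defaultdict(lambda: [0, 0])  # neighborhood -> [flags, total]
    for _group, hood, label in records:
        counts[hood][0] += label
        counts[hood][1] += 1
    return {hood: flags / total for hood, (flags, total) in counts.items()}

def predict(model, hood, threshold=0.5):
    """Flag a new case if its neighborhood's historical rate meets the threshold."""
    return int(model[hood] >= threshold)

model = train_rate_model(HISTORY)

# 'group' is never used as an input, yet outcomes still split along group lines.
for group, hood in [("A", "north"), ("B", "south")]:
    print(group, hood, model[hood], predict(model, hood))
```

Running it yields a 0.25 historical rate (not flagged) for the north neighborhood and 0.75 (flagged) for the south. Dropping the protected attribute alone did not remove the bias, which is why audits of legal AI tools look for proxy variables as well.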

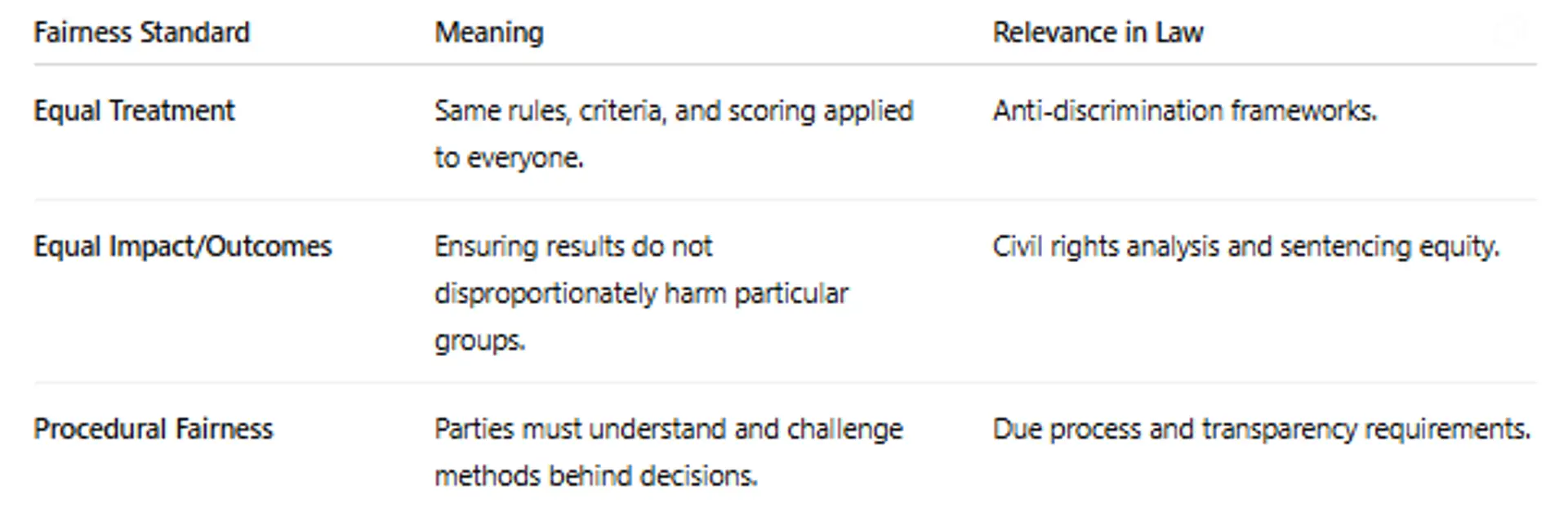

2. Fairness in Legal AI

Fairness is context-dependent: different legal processes may require different fairness standards, and the same system can satisfy one standard while failing another.
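One way to see why the choice of standard matters is to score the same predictions against two common statistical fairness definitions. In this minimal Python sketch, assuming a hypothetical audit sample where 1 means "approved," demographic parity compares approval rates across groups, while equal opportunity compares approval rates only among people who were actually qualified. All data are invented.

```python
from fractions import Fraction

# Hypothetical audit sample: (group, actually_qualified, model_approved).
RECORDS = [
    ("A", 1, 1), ("A", 1, 1), ("A", 0, 0), ("A", 0, 0), ("A", 0, 0), ("A", 0, 0),
    ("B", 1, 1), ("B", 1, 1), ("B", 1, 0), ("B", 1, 0), ("B", 0, 0), ("B", 0, 0),
]

def approval_rate(records, group):
    """Demographic parity compares this across groups: P(approved | group)."""
    preds = [p for g, _y, p in records if g == group]
    return Fraction(sum(preds), len(preds))

def true_positive_rate(records, group):
    """Equal opportunity compares this: P(approved | qualified, group)."""
    preds = [p for g, y, p in records if g == group and y == 1]
    return Fraction(sum(preds), len(preds))

for group in ("A", "B"):
    print(group, approval_rate(RECORDS, group), true_positive_rate(RECORDS, group))
```

Both groups are approved at the same rate (1/3), so demographic parity is satisfied. Yet qualified applicants in group B are approved only half the time, against every time in group A, so equal opportunity is violated. Which standard should govern depends on the legal process being evaluated.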

Challenge:

Many AI systems are non-transparent (“black box models”), making them difficult to audit or challenge in court.

3. Reliability and Accuracy of AI Outputs

Lawyers are increasingly using AI for:

- Legal research

- Contract drafting

- Case outcome prediction

- Document summarization

- Due diligence review

However, reliability varies, and common failure modes include:

- Hallucinations: AI fabricates cases, citations, or legal principles.

- Overconfidence Bias: AI states uncertain conclusions as definitive.

- Context Errors: AI may misapply jurisdictional rules or procedural standards.

- Outdated Data: AI models may not reflect recent case law or statutory amendments.

Professional Risk:

Relying on incorrect AI-generated legal analysis without verification can constitute professional negligence.
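Tooling can support part of this verification step. The Python sketch below, offered as an illustration under simplifying assumptions, extracts reporter-style citations from AI-generated text and lists any that are not in a set the attorney has already confirmed against an authoritative source. The regex covers only a few reporter formats. The function name and the `verified` set are hypothetical and do not correspond to any real research platform's API.

```python
import re

# Simplified pattern for citations such as "347 U.S. 483" or "123 F.3d 456".
# Real citation formats (pin cites, parallel cites, state reporters) are far
# more varied; this pattern is an illustrative assumption only.
CITATION = re.compile(
    r"\b(\d{1,4})\s+(U\.S\.|S\. Ct\.|F\.[234]d|F\. Supp\. [23]d)\s+(\d{1,5})\b"
)

def unverified_citations(ai_text, verified):
    """Return citations found in ai_text that are not in `verified`.

    `verified` is a hypothetical set of citation strings the attorney has
    personally confirmed (e.g., by pulling and reading each case).
    """
    found = {" ".join(m.groups()) for m in CITATION.finditer(ai_text)}
    return sorted(found - verified)

draft = ("See Brown v. Board of Education, 347 U.S. 483 (1954); "
         "Smith v. Jones, 999 F.3d 123 (2021).")  # Smith v. Jones is invented
print(unverified_citations(draft, verified={"347 U.S. 483"}))  # ['999 F.3d 123']
```

Here the invented "Smith v. Jones" stands in for a hallucinated citation and is flagged for review. An empty result does not prove a draft is sound: the check cannot tell whether a real case actually supports the proposition it is cited for, and citations in formats the pattern misses are skipped entirely, so attorney review of each authority remains necessary.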

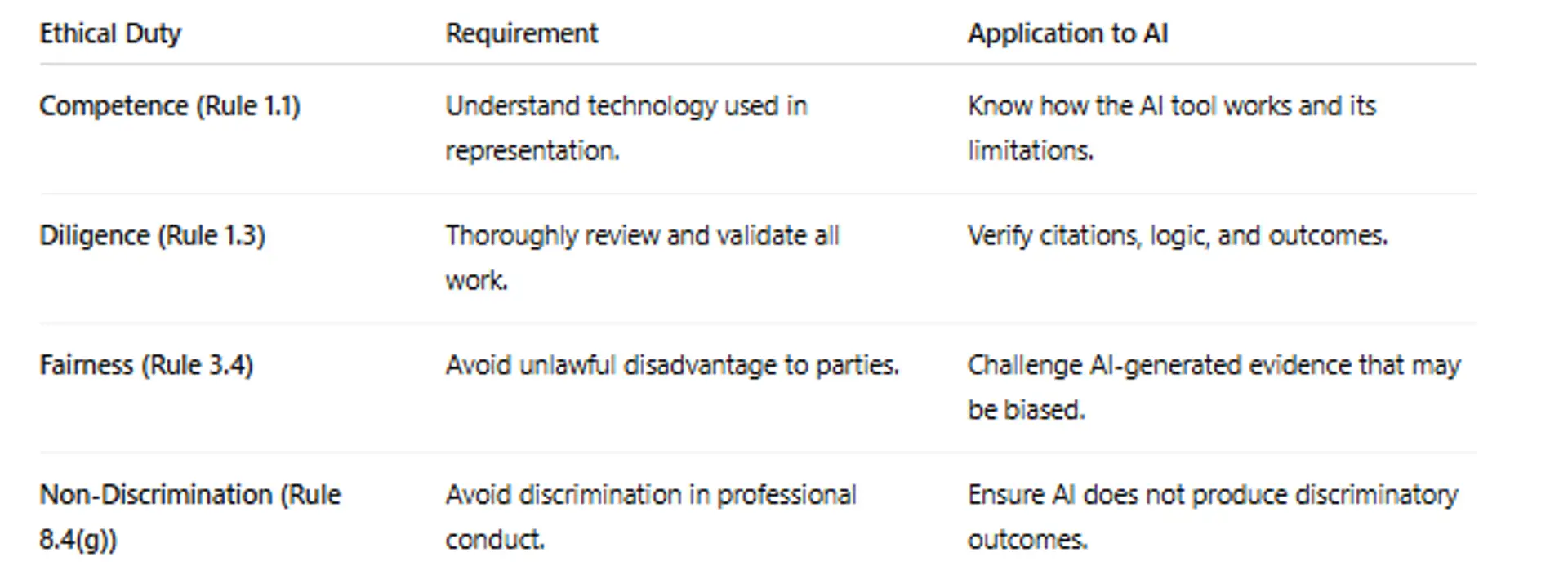

Comment 8 to ABA Model Rule 1.1 (Competence) explicitly extends the duty of competence to keeping abreast of “the benefits and risks associated with relevant technology.”

4. Lawyer’s Ethical Duties When Using AI

Attorneys must:

Disclosure Requirement:

Courts increasingly require attorneys to disclose AI use or certify verification of AI-generated filings.

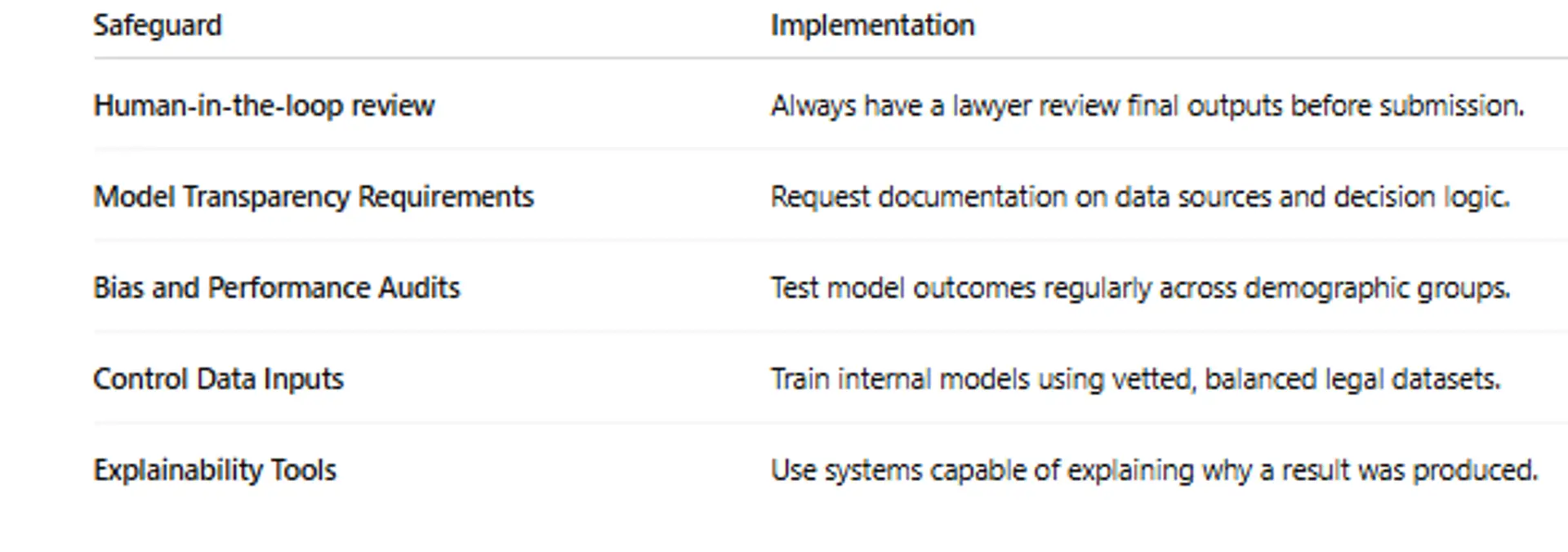

5. Safeguards to Ensure Responsible Use

To reduce risks:

Bottom Line:

AI can support, but never replace, final legal judgment and ethical responsibility.

Supplementary Learning Videos

- Data Bias The Root of Many AI Problems

- Why Is Algorithmic Bias A Problem In Data Mining? - AI and Machine Learning Explained

- What Does Fairness In AI Truly Mean? - AI and Machine Learning Explained

Lesson 5.3 Quiz — Bias, Fairness, and Reliability (8 Multiple Choice)

Please complete this quiz to check your understanding of Lesson 5.3: Bias, Fairness, and Reliability.

You must score at least 70% to pass this lesson quiz.

This quiz counts toward your final certification progress.

Conclusion

AI can improve efficiency in legal practice, but it must be used responsibly. Bias in data or model design can lead to unfair or unequal outcomes, and reliability issues—such as incorrect or fabricated information—require careful review. Lawyers must apply human judgment, verify AI outputs, and ensure that their use of technology aligns with ethical duties of competence, fairness, and due process. The goal is not just to use AI, but to use it in a way that supports justice and protects clients.

Course 3 - Mastering AI and ChatGPT for Legal Practice: From Fundamentals to Advanced Research and Ethical Use

© 2025 Invastor. All Rights Reserved
