Module 3: Legal Research and Case Law Verification

Lesson 3.2: Verifying Case Citations and Detecting Fake Case Law

Learning Objectives

After completing this lesson, you will be able to:

- Verify the authenticity of cases cited by AI or other secondary sources.

- Identify red flags that suggest a legal case citation may be incorrect or fabricated.

- Confirm whether a case is still applicable or has been overturned, modified, or distinguished.

- Use official and reliable databases for checking case law.

1. Why Verification Matters

AI tools can generate case citations, but they sometimes fabricate cases or cite outdated rulings. Fabricating a case is known as:

Case Hallucination – where the AI produces a realistic-sounding but nonexistent case.

In legal work, using a fake or wrong citation can:

- Undermine credibility

- Mislead clients or colleagues

- Result in sanctions for unethical research practices

Therefore, every case citation must be verified manually.

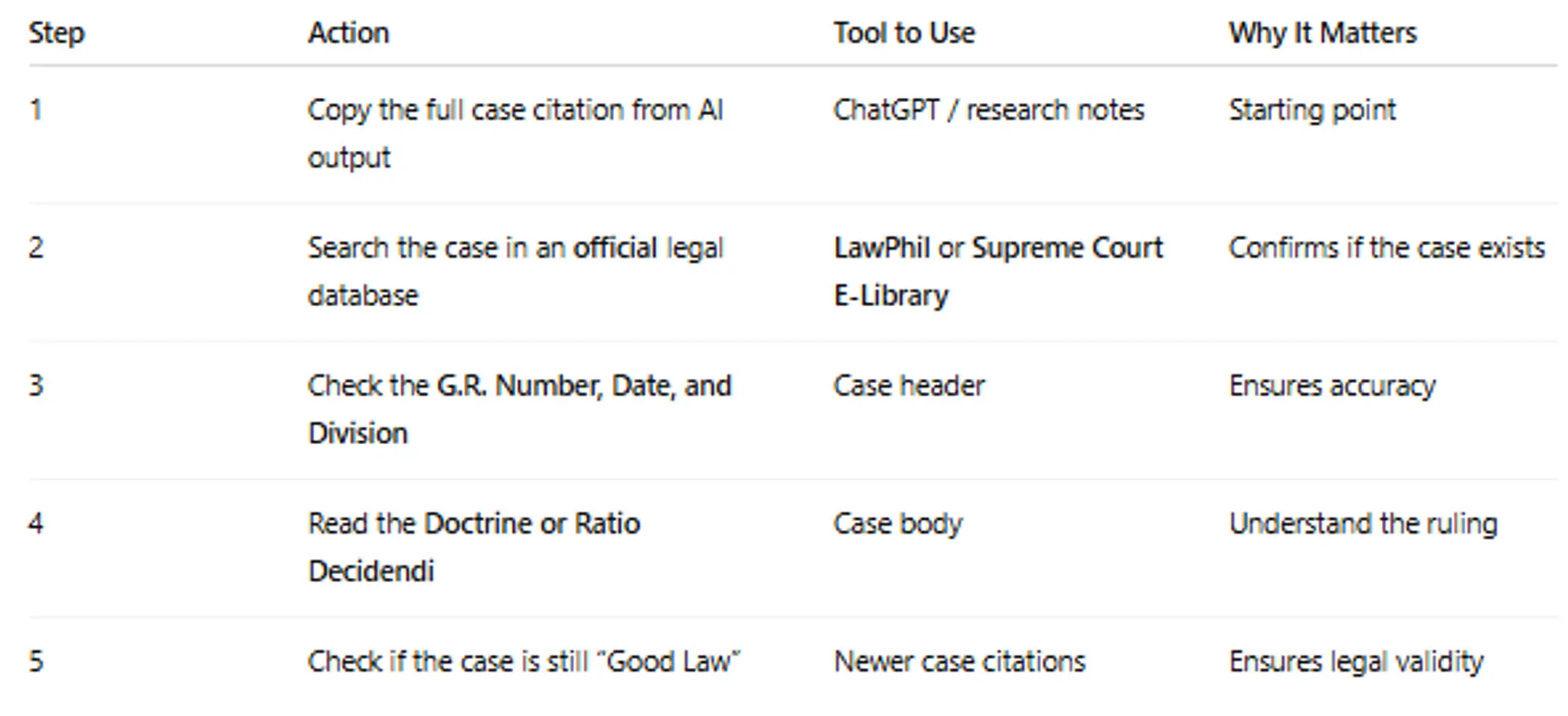

2. The Standard Case Verification Workflow

3. Trusted Legal Databases (Philippines)

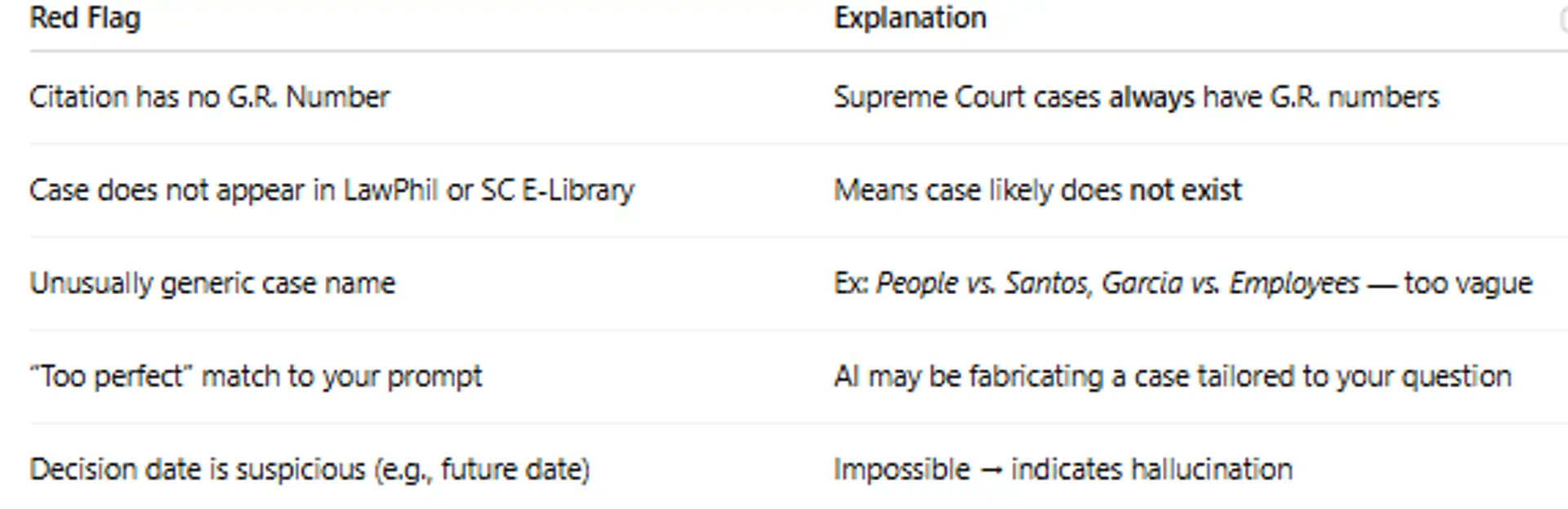

4. How to Identify Fake Case Citations Quickly

5. Checking if a Case is Still Good Law

Even if a case is real, it may have been:

- Overturned

- Modified

- Clarified

- Distinguished

How to Check:

Search the case title in Google Scholar (Case Law) or LawPhil, then look at later decisions citing it.

If a later decision says, for example, “This ruling is no longer controlling,” the case is no longer good law.
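When many later decisions cite a case, a small script can help decide which ones to read first. The sketch below is only a triage aid under stated assumptions: the phrase list in `TREATMENT_PATTERNS` and the function name `flag_treatment` are hypothetical, courts use many other wordings, and a match (or the absence of one) never replaces reading the later decision itself.

```python
import re
from typing import List

# Hypothetical phrase list for illustration; real decisions use many other wordings.
TREATMENT_PATTERNS = {
    "overturned": r"\b(overturned|overruled|reversed|abandoned)\b",
    "modified": r"\bmodified\b",
    "clarified": r"\bclarified\b",
    "distinguished": r"\bdistinguished\b",
    "no longer controlling": r"\bno longer controlling\b",
}


def flag_treatment(excerpt: str) -> List[str]:
    """Return the treatment labels whose phrases appear in the excerpt."""
    text = excerpt.lower()
    return [
        label
        for label, pattern in TREATMENT_PATTERNS.items()
        if re.search(pattern, text)
    ]


# Excerpt copied by hand from a later decision that cites the case being checked.
excerpt = "This ruling is no longer controlling in light of later amendments."
print(flag_treatment(excerpt))  # ['no longer controlling']
```

Any excerpt that is flagged should be read in full, since a word such as "distinguished" may refer to a different case cited in the same passage.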

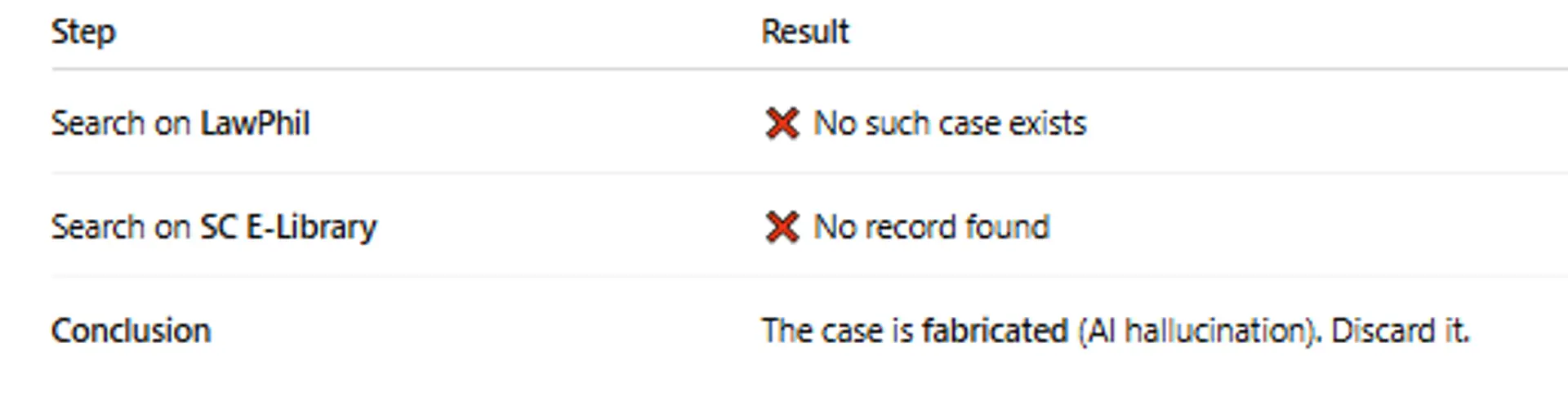

6. Example Case Verification Practice

AI Claims:

“The Supreme Court ruled in Garcia vs. ABC Company, G.R. No. 195847 (2019), that employee social media posts cannot justify termination.”

Verification:
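As a first step in checking a claim like the one above, the citation can be split into the parts you would look up in an official database. This is a minimal sketch, assuming the citation is written as "Title, G.R. No. digits (year)"; the pattern `CITATION_RE` and the function `parse_citation` are hypothetical names, and real citations vary in format. A citation that parses cleanly is not thereby shown to exist: the extracted title, G.R. number, and year still have to be matched against the official record, and the claimed holding compared with the actual text of the decision.

```python
import re
from typing import Optional

# Assumed shape: "<Title>, G.R. No. <digits> (<year>)". Real citations vary.
CITATION_RE = re.compile(
    r"^(?P<title>.+?),\s*G\.R\.\s*No\.\s*(?P<gr_no>\d+)\s*\((?P<year>\d{4})\)$"
)


def parse_citation(citation: str) -> Optional[dict]:
    """Split a citation into title, G.R. number, and year, or return None."""
    match = CITATION_RE.match(citation.strip())
    if match is None:
        return None  # Format not recognized: check the citation by hand.
    return {
        "title": match.group("title").strip(),
        "gr_no": match.group("gr_no"),
        "year": int(match.group("year")),
    }


parts = parse_citation("Garcia vs. ABC Company, G.R. No. 195847 (2019)")
print(parts)
# {'title': 'Garcia vs. ABC Company', 'gr_no': '195847', 'year': 2019}
```

Searching the database by G.R. number first and then comparing the returned title and year against the parsed values makes a mismatch easy to spot.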

7. Supplementary Learning Resource (Video)

Video:

- Fundamentals of Legal Citation Part 1: Case Law (Gallagher Basics series)

- Cracking the Code of Legal Citations: A Step-by-Step Guide to Properly Citing Legal Sources | LV

- Introduction to Legal Research with Westlaw

Lesson 3.2 Quiz

Case Law Verification

Please complete this quiz to check your understanding of Lesson 3.2.

You must score at least 70% to pass.

This quiz counts toward your certification progress.

Click here for Quiz 3.2

Conclusion

Verifying case law is a non-negotiable professional requirement.

AI can accelerate research, but it cannot replace the lawyer’s responsibility to confirm accuracy.

Proper verification protects:

- The client

- Your credibility

- The integrity of the legal process

AI suggests. The lawyer verifies. The court decides.

Next and Previous Lesson

Next: Lesson 3.3 – Using AI with Legal Databases (Lexis, Westlaw, Bloomberg)

Previous: Lesson 3.1 – Conducting AI-Supported Legal Research Correctly


© 2025 Invastor. All Rights Reserved
